Lecture 8 Deep Learning Software

Dl.cs231n.lecture| 23 Oct 2018

Tags:

DeepLearning

CS231n

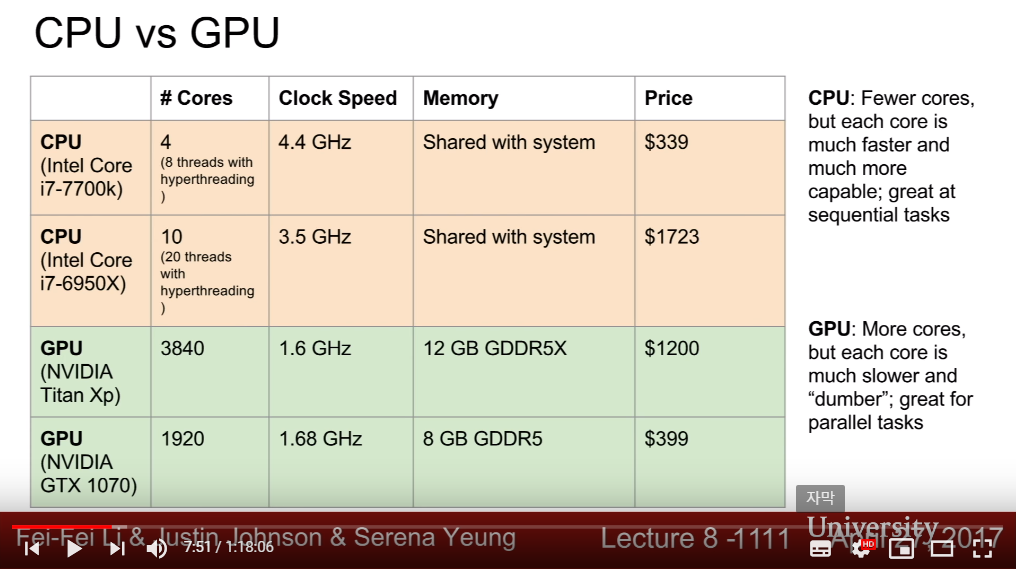

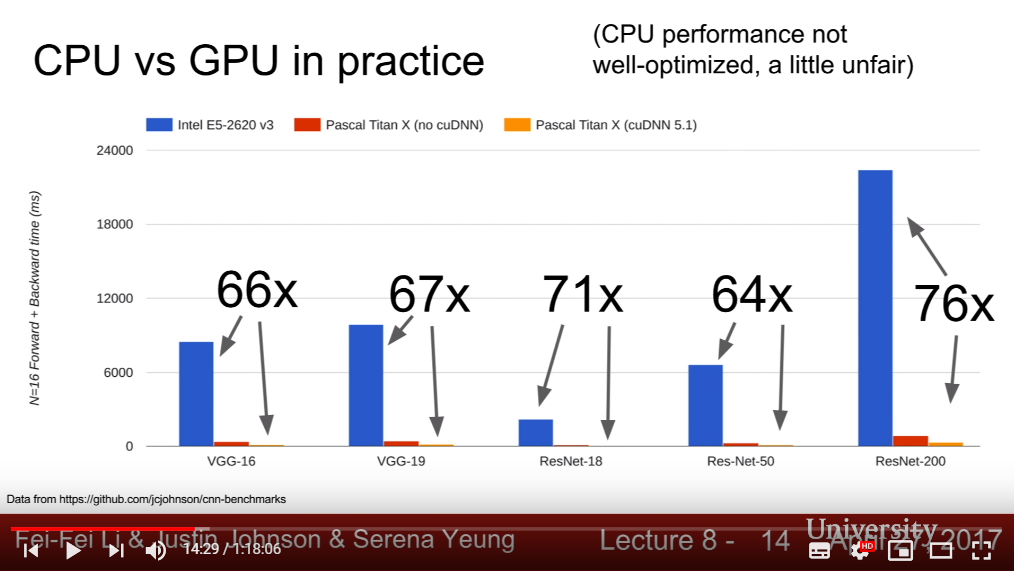

- GPUs are much faster on massively parallel problems because they have far more cores, each with a simpler design (CPU cores are fewer but individually more capable, and are better suited to sequential tasks).

- GPUs can be programmed with CUDA (NVIDIA only; higher-level libraries built on it include cuBLAS, cuFFT, and cuDNN) or with OpenCL (also runs on AMD hardware, but is usually slower than CUDA).

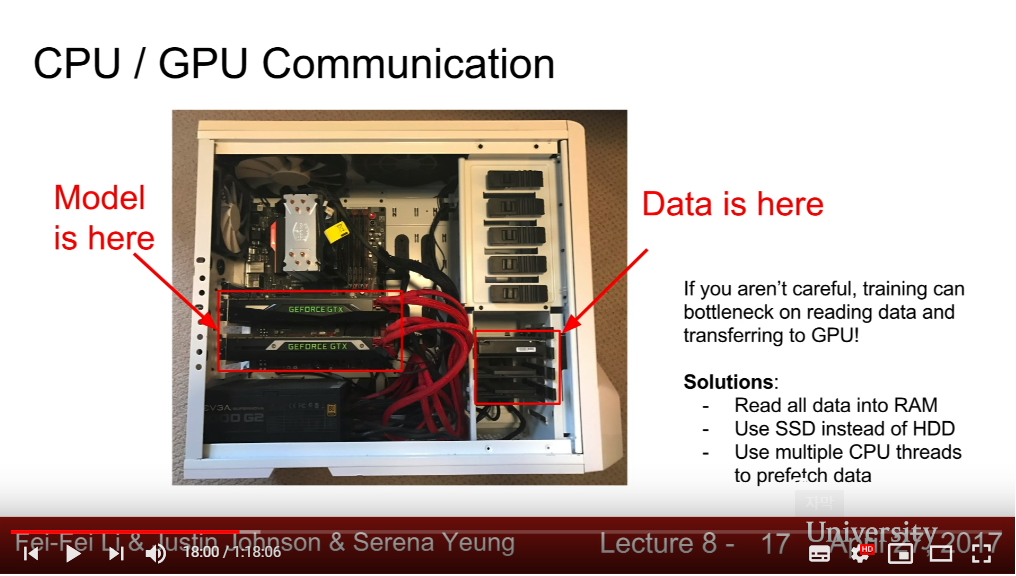

- Reading data from disk and transferring it to the GPU can become a bottleneck. Possible fixes: keep the whole dataset in RAM, use an SSD instead of an HDD, or use multiple CPU threads to prefetch data.
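The multi-threaded loading idea can be sketched with only the Python standard library. This is a minimal illustration, not how any particular framework implements its data loader: `load_batch` is a hypothetical stand-in for reading and preprocessing one batch from disk, and the "training step" is just a sum.

```python
import queue
import threading
import time


def load_batch(index):
    """Hypothetical stand-in for slow disk I/O plus preprocessing."""
    time.sleep(0.01)  # pretend to wait on the disk
    return [index] * 4  # a fake batch of 4 samples


def prefetch_batches(num_batches, num_workers=4, max_prefetch=8):
    """Yield batches in order while worker threads load ahead in the background."""
    results = queue.Queue(maxsize=max_prefetch)  # bounded, so workers pause when far ahead
    todo = queue.Queue()
    for i in range(num_batches):
        todo.put(i)

    def worker():
        while True:
            try:
                i = todo.get_nowait()
            except queue.Empty:
                return  # no work left
            results.put((i, load_batch(i)))

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(num_workers)]
    for t in threads:
        t.start()

    # Workers may finish out of order, so buffer until the next index arrives.
    pending = {}
    for expected in range(num_batches):
        while expected not in pending:
            i, batch = results.get()
            pending[i] = batch
        yield pending.pop(expected)


total = 0
for batch in prefetch_batches(num_batches=16):
    total += sum(batch)  # stand-in for a training step on the GPU
```

While the consumer is busy with one batch, the workers are already loading the next ones, so the "GPU" rarely has to wait on the disk.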

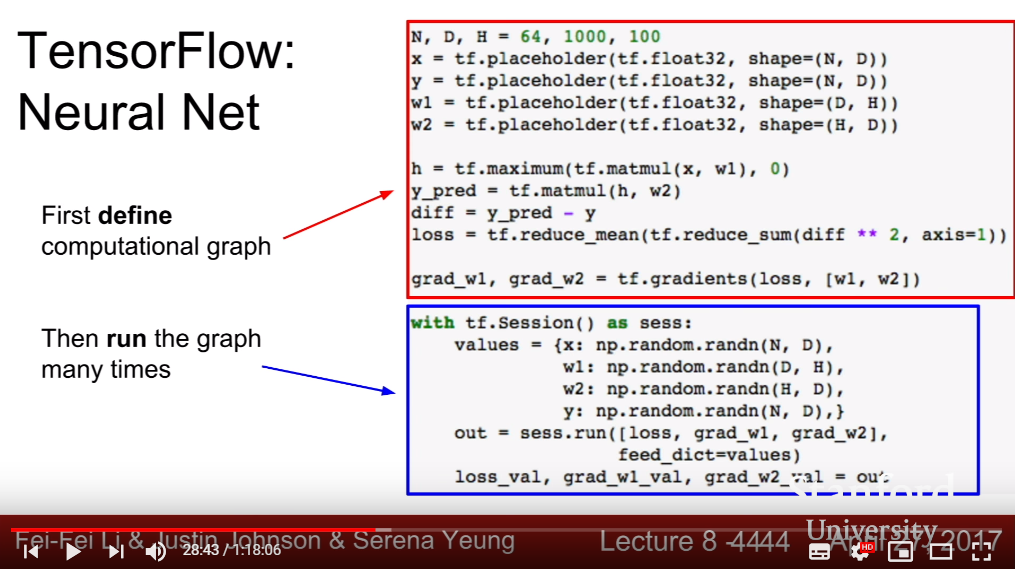

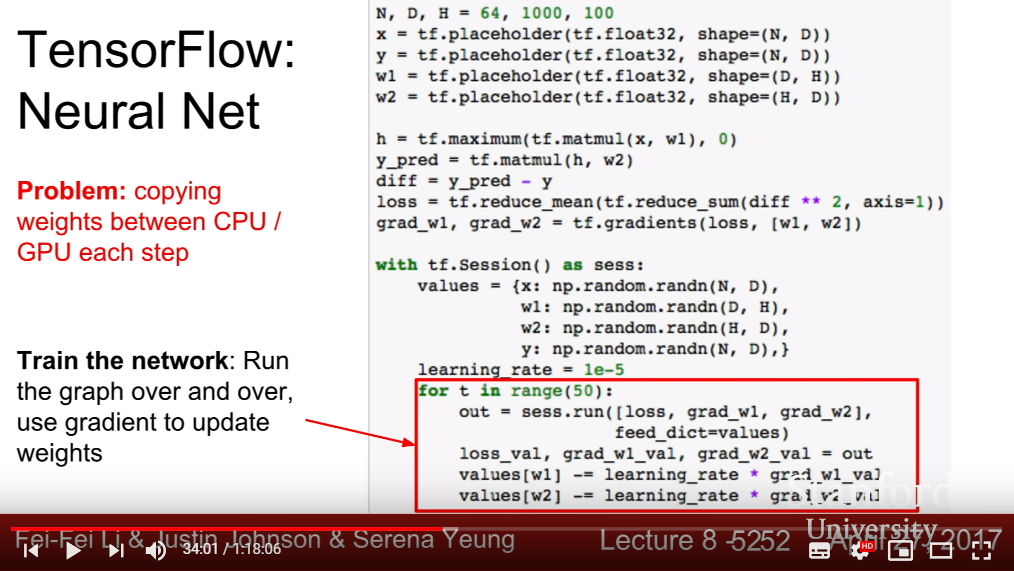

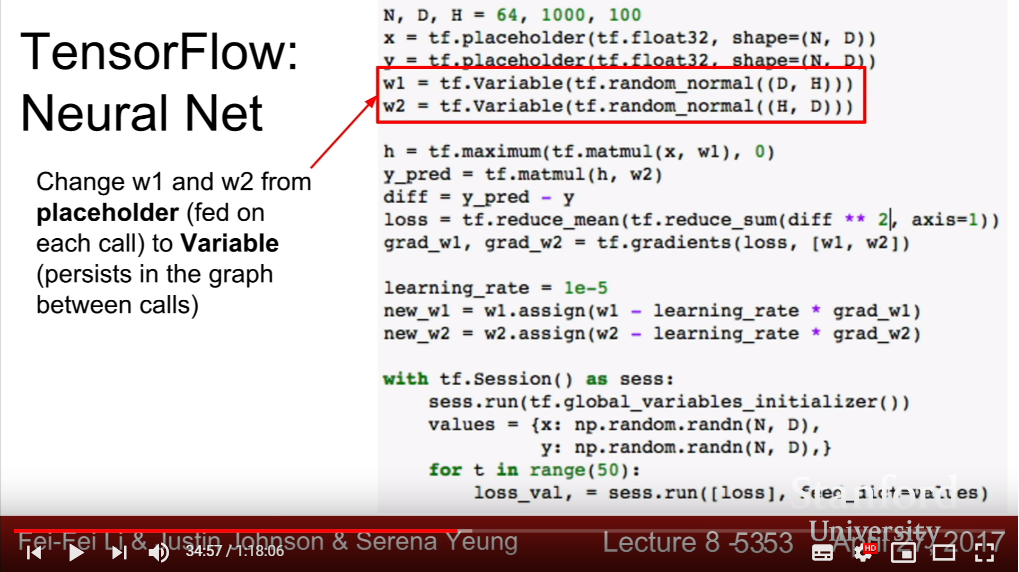

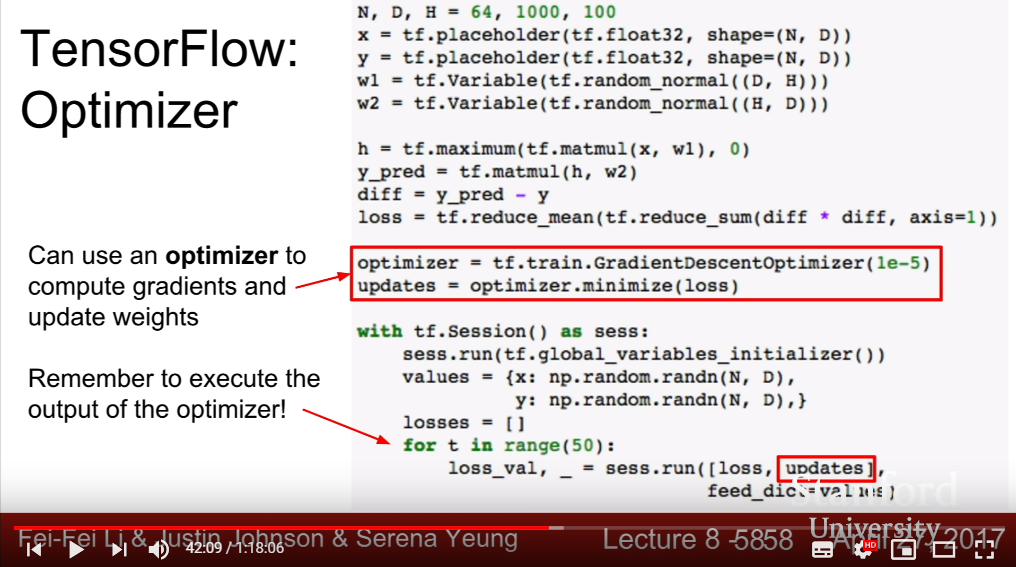

- What deep learning libraries are useful for:

- Easily build big computational graphs

- Easily compute gradients in computational graphs

- Run it all efficiently on the GPU (cuDNN, cuBLAS, etc.)

- Goal of Deep Learning Libraries

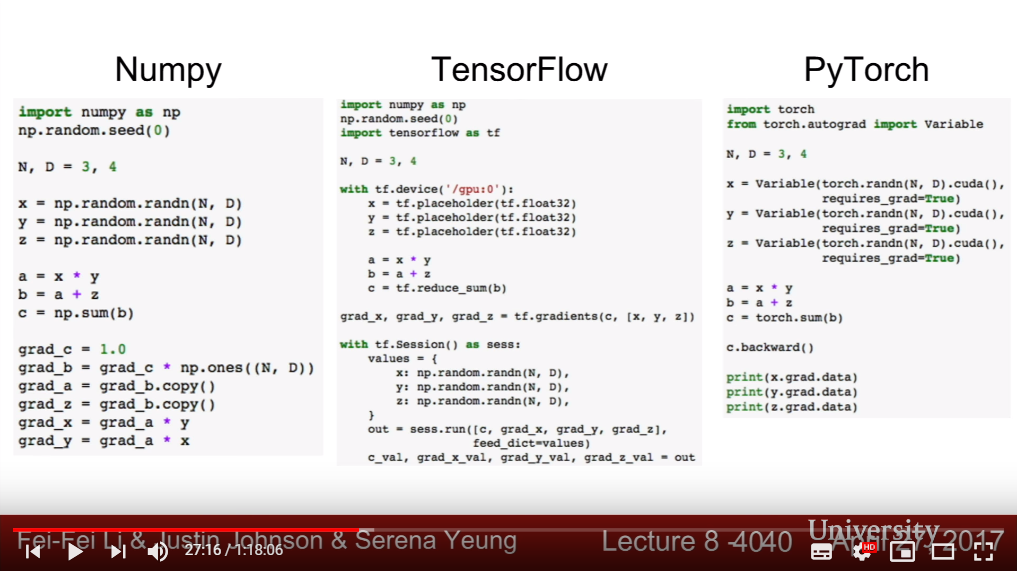

- Write computations as easily as in NumPy (or similar), while getting gradient computation and GPU execution with little extra effort.
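To make "a computational graph with automatic gradients" concrete, here is a toy reverse-mode sketch in plain Python. The `Node` class and its methods are hypothetical names made up for this illustration; real frameworks do the same bookkeeping on whole tensors and dispatch the arithmetic to the GPU.

```python
class Node:
    """A scalar value in a computational graph (toy example, not a real API)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each parent is stored as (parent_node, local_gradient).
        self.parents = parents

    def __add__(self, other):
        return Node(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Node(self.value * other.value,
                    ((self, other.value), (other, self.value)))

    def backward(self):
        """Accumulate d(self)/d(node) into node.grad for every upstream node."""
        # Topological order ensures a node's grad is complete before it is used.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local_grad in node.parents:
                parent.grad += local_grad * node.grad


# The forward pass builds the graph: f = (x * y) + z
x, y, z = Node(2.0), Node(3.0), Node(4.0)
f = x * y + z
f.backward()
print(f.value, x.grad, y.grad, z.grad)  # 10.0 3.0 2.0 1.0
```

The user only writes the forward expression; the gradients come from the graph that was recorded along the way.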

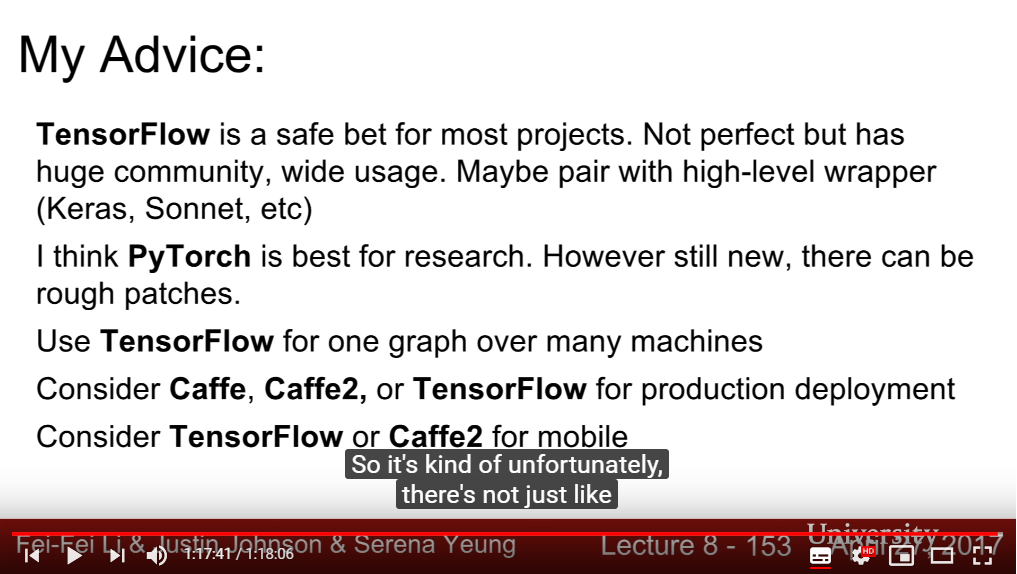

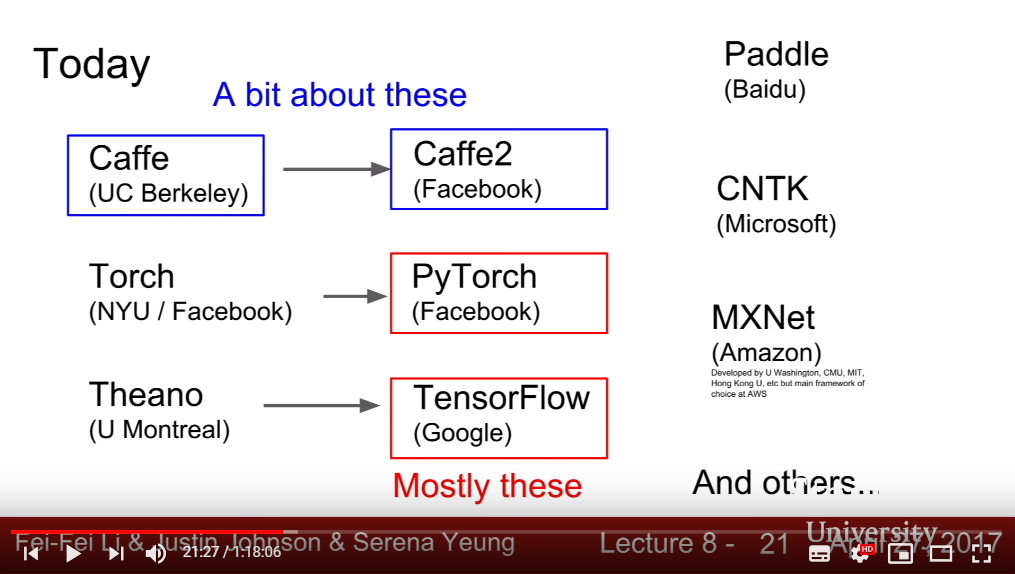

- Trends in deep learning libraries: there are many frameworks, TensorFlow is leading the trend, and industry and big companies now back libraries that originated in academia.

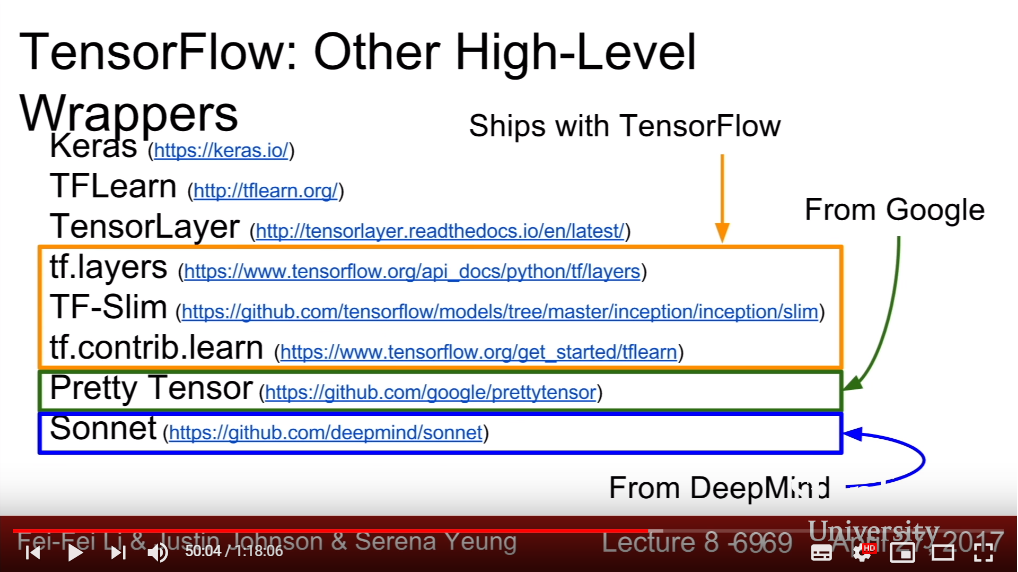

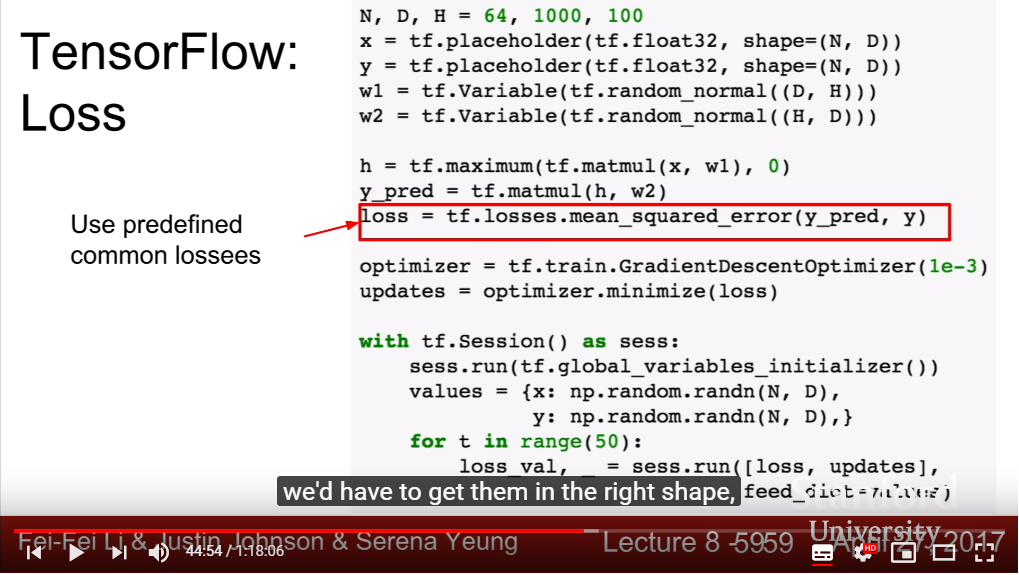

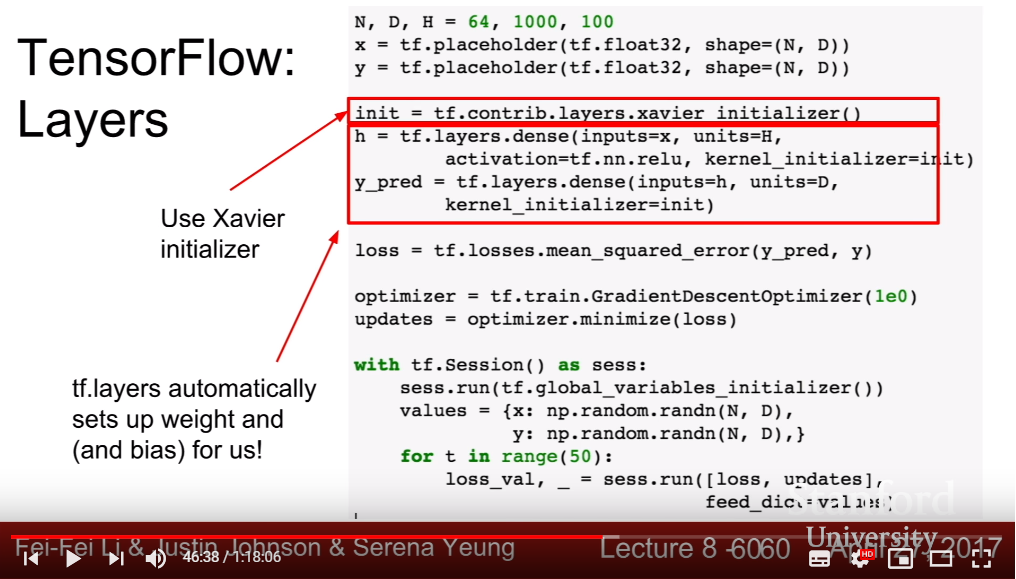

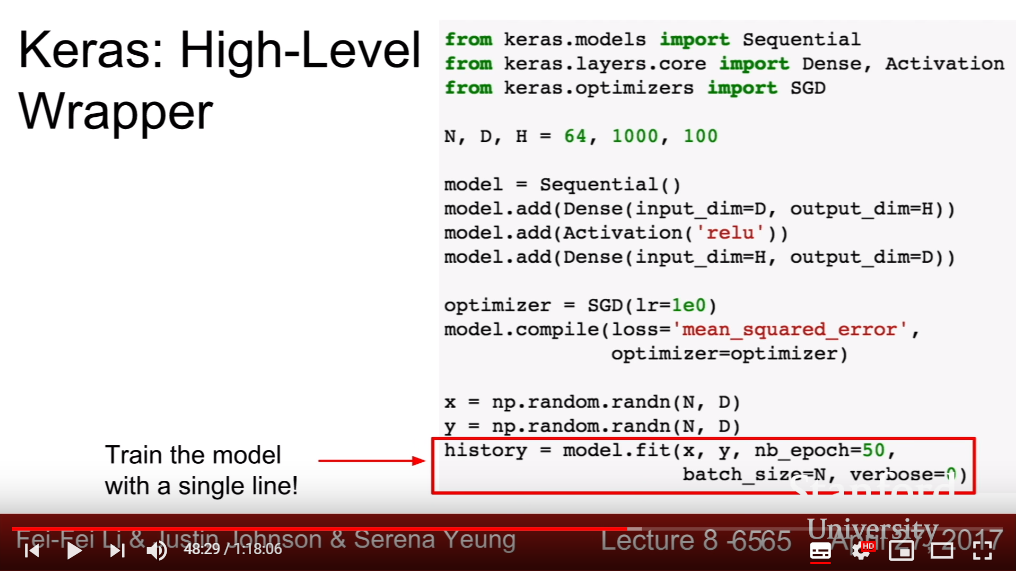

- Abstractions in TensorFlow

- APIs of TensorFlow

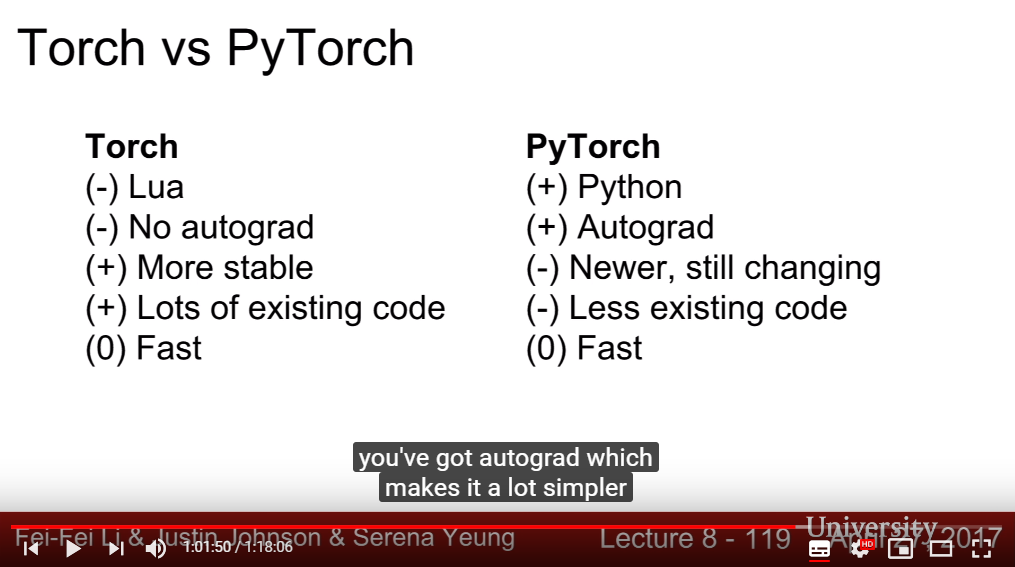

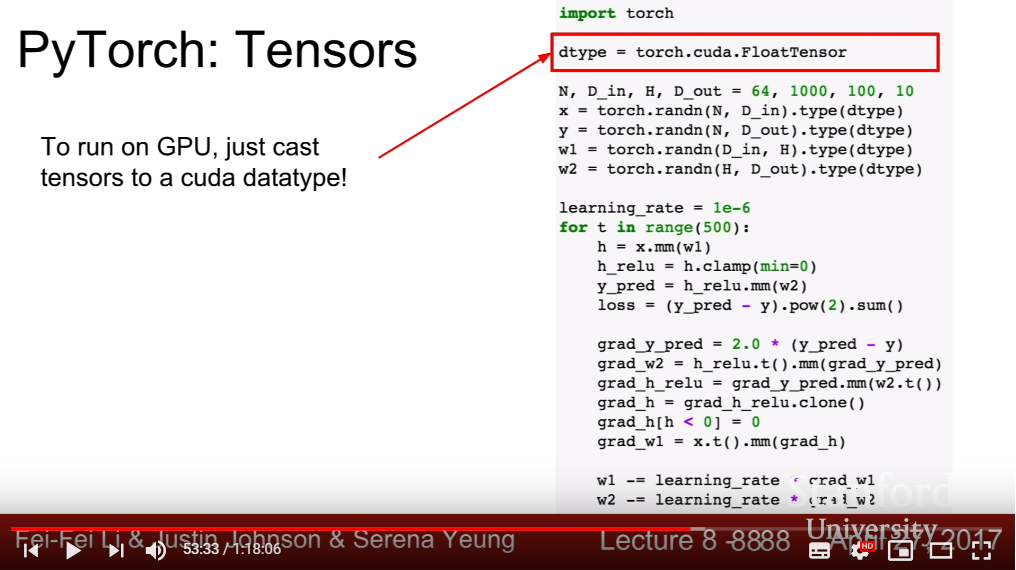

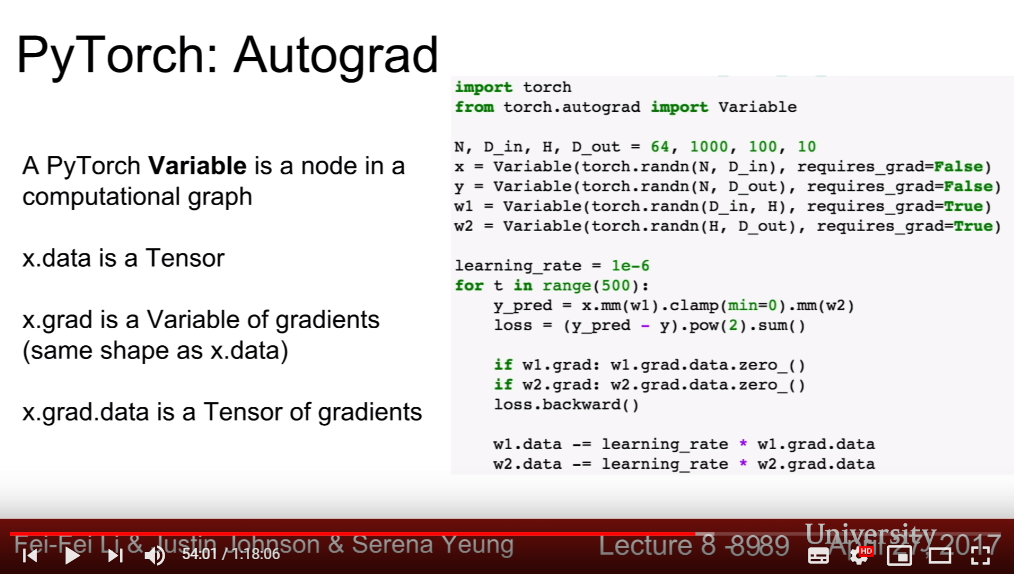

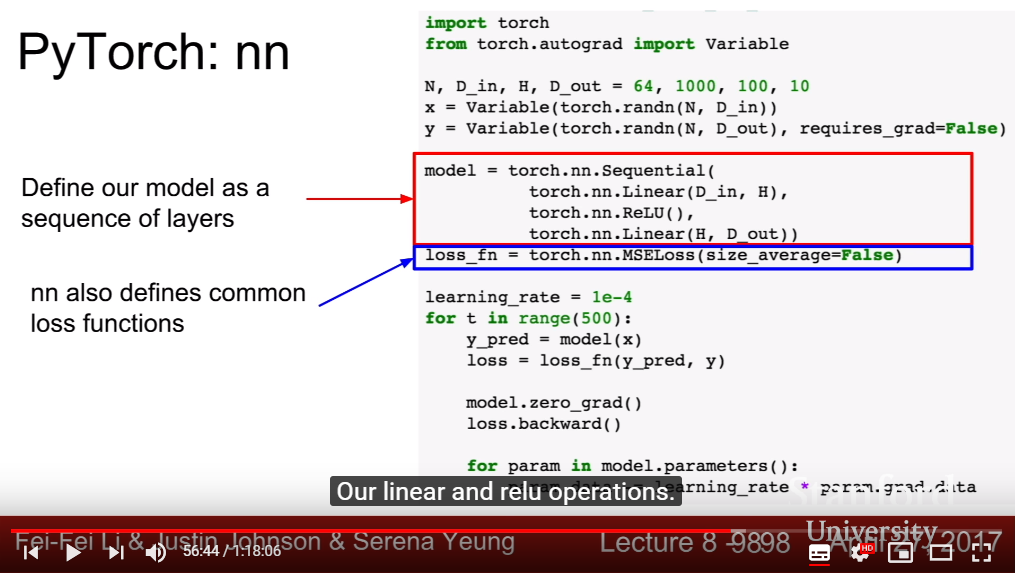

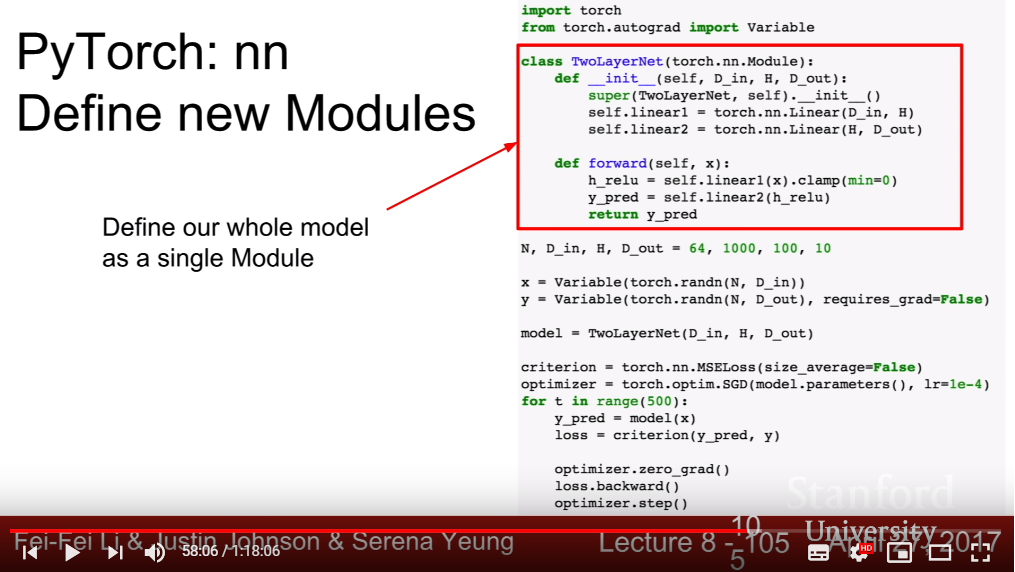

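- Abstractions in PyTorch

- Tensor: an imperative n-dimensional array that can run on the GPU

- Variable: a node in the computational graph that stores data and its gradient

- PyTorch Tensors and Variables share the same API, so Tensor code becomes Variable code just by wrapping each Tensor in a Variable (since PyTorch 0.4 the two are merged, and a Tensor created with `requires_grad=True` plays the role of a Variable)

- Module: a neural network layer that can store state or learnable weights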

- APIs of PyTorch

- Pretrained models: `torchvision.models.<model>(pretrained=True)`, for example `torchvision.models.alexnet(pretrained=True)`

- Visdom: log values from your code and visualize them (it cannot visualize the structure of the computational graph yet)

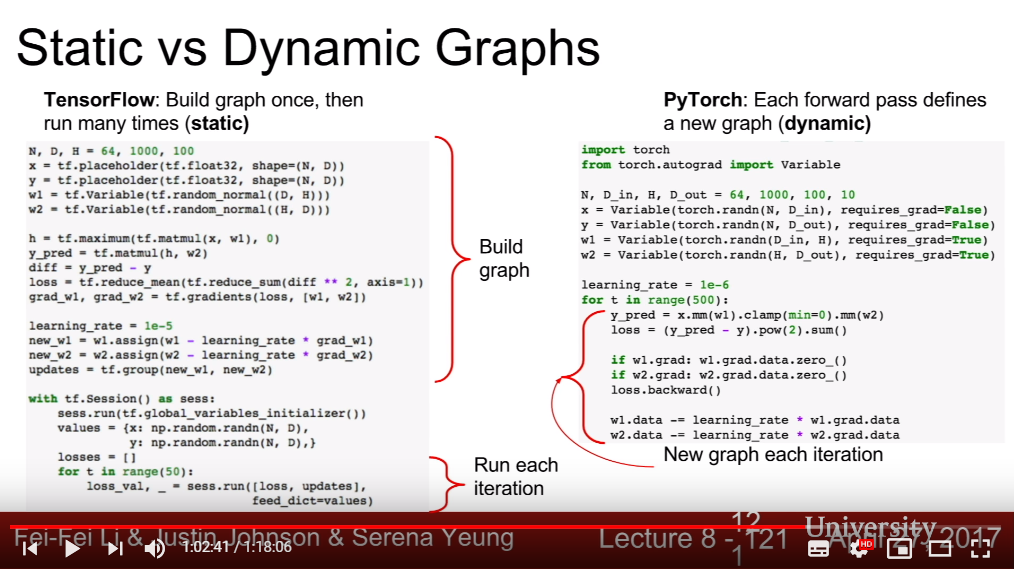

- Advantages of Static Structure

- The framework can optimize the graph before it runs (fusing operations, reordering them, choosing more efficient implementations)

- Once the graph is built, it can be serialized and reused without rebuilding it (in a dynamic structure, by contrast, graph building and execution are intertwined, so the building code is always needed)
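The "build once, optimize, then run many times" workflow can be illustrated with a toy static graph in plain Python. Everything here is hypothetical and made up for the sketch (the op tuples, `fuse_scale_ops`, `run_graph`); it only mimics the idea of fusing operations ahead of time and is not how TensorFlow represents or optimizes its graphs.

```python
import json


def fuse_scale_ops(graph):
    """Merge consecutive ("scale", k) ops into one, before the graph ever runs."""
    fused = []
    for op, arg in graph:
        if op == "scale" and fused and fused[-1][0] == "scale":
            fused[-1] = ("scale", fused[-1][1] * arg)
        else:
            fused.append((op, arg))
    return fused


def run_graph(graph, x):
    """Execute a fixed sequence of ops on the input x."""
    for op, arg in graph:
        if op == "scale":
            x = x * arg
        elif op == "shift":
            x = x + arg
        else:
            raise ValueError(f"unknown op: {op}")
    return x


# 1. Build the graph once. No computation happens here.
graph = [("scale", 2.0), ("scale", 3.0), ("shift", 1.0)]

# 2. Optimize it ahead of time: the two scales become a single scale by 6.
optimized = fuse_scale_ops(graph)

# 3. Serialize it; it can be reloaded later without the code that built it.
saved = json.dumps(optimized)
restored = [tuple(op) for op in json.loads(saved)]

# 4. Run the same graph many times on different inputs.
outputs = [run_graph(restored, x) for x in (0.0, 1.0, 2.0)]
print(outputs)  # [1.0, 7.0, 13.0]
```

The optimization cost is paid once in step 2, while step 4 can be repeated for every batch.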

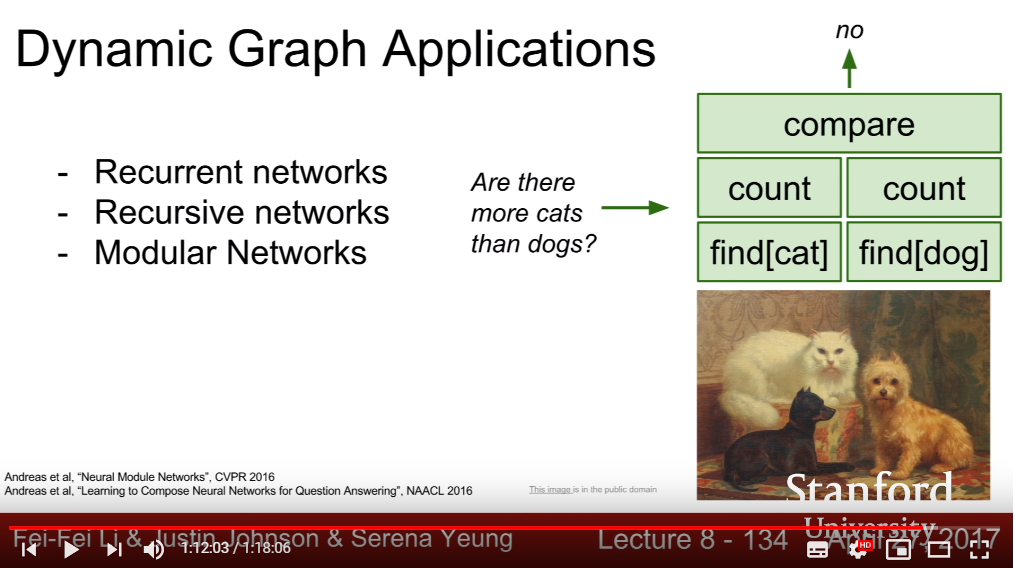

- Advantages of Dynamic Structure

- Code is much cleaner and easier to write in most scenarios, such as conditionals and loops (TensorFlow has to provide its own control-flow operators, the "magic lines", to express these inside a computational graph). The TensorFlow Fold library makes dynamic graphs easier in TF by using dynamic batching.

- Well suited to building recurrent networks, recursive networks, and modular networks
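With a dynamic structure, ordinary Python control flow decides which operations run, and the graph is simply whatever was executed on that forward pass. The sketch below records such a trace for a toy recurrent computation over sequences of different lengths; `forward` and the recorded op names are hypothetical and only illustrate the idea.

```python
def forward(sequence, w=0.5):
    """Toy recurrent forward pass; returns the result and the ops that ran."""
    trace = []  # the "graph" is rebuilt from scratch on every call
    h = 0.0
    for x in sequence:  # plain Python loop: one step per input element
        h = w * h + x
        trace.append("recur")
        if h < 0:  # plain Python conditional, decided by the data
            h = 0.0
            trace.append("clamp")
    return h, trace


h1, trace1 = forward([1.0, 2.0])
h2, trace2 = forward([1.0, -3.0, 2.0])
print(h1, trace1)  # 2.5 ['recur', 'recur']
print(h2, trace2)  # 2.0 ['recur', 'recur', 'clamp', 'recur']
```

The two calls produce graphs of different shapes from the same code, which is what makes variable-length and data-dependent models straightforward to write.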

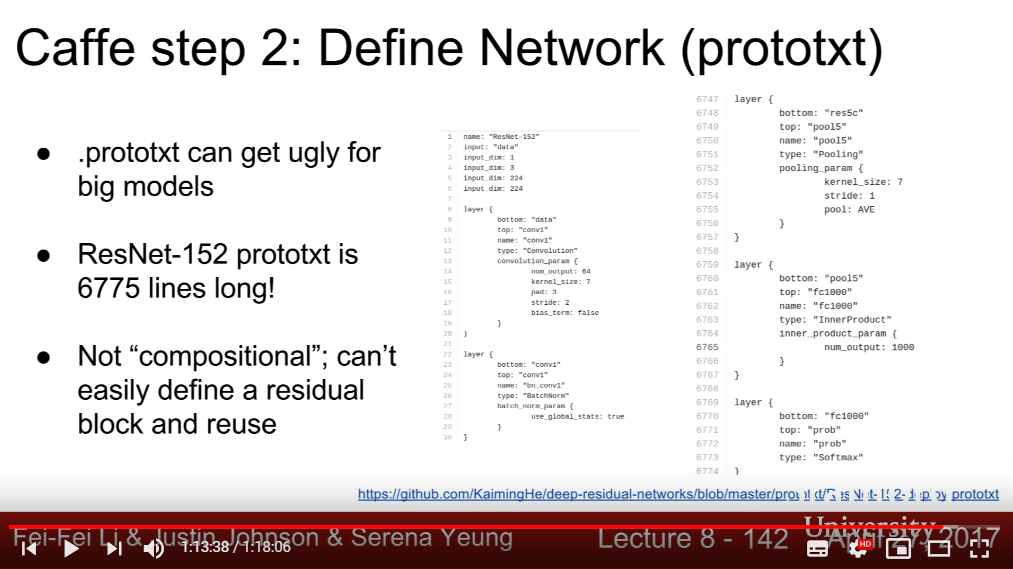

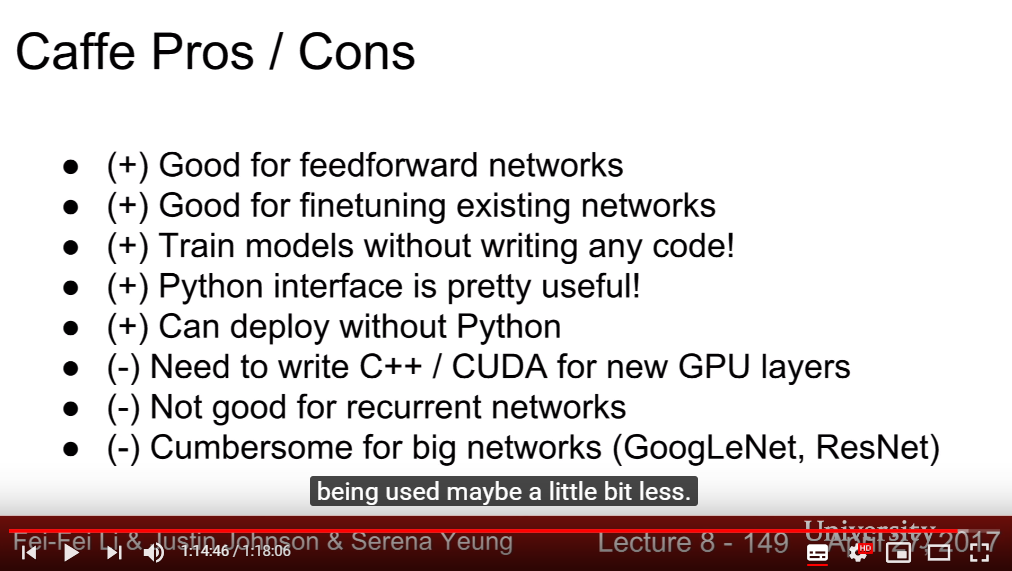

- Google is trying to cover every use case with a single framework, TensorFlow, whereas Facebook maintains separate frameworks for static graphs (Caffe2) and dynamic graphs (PyTorch).